
#How to install spark from source code download#

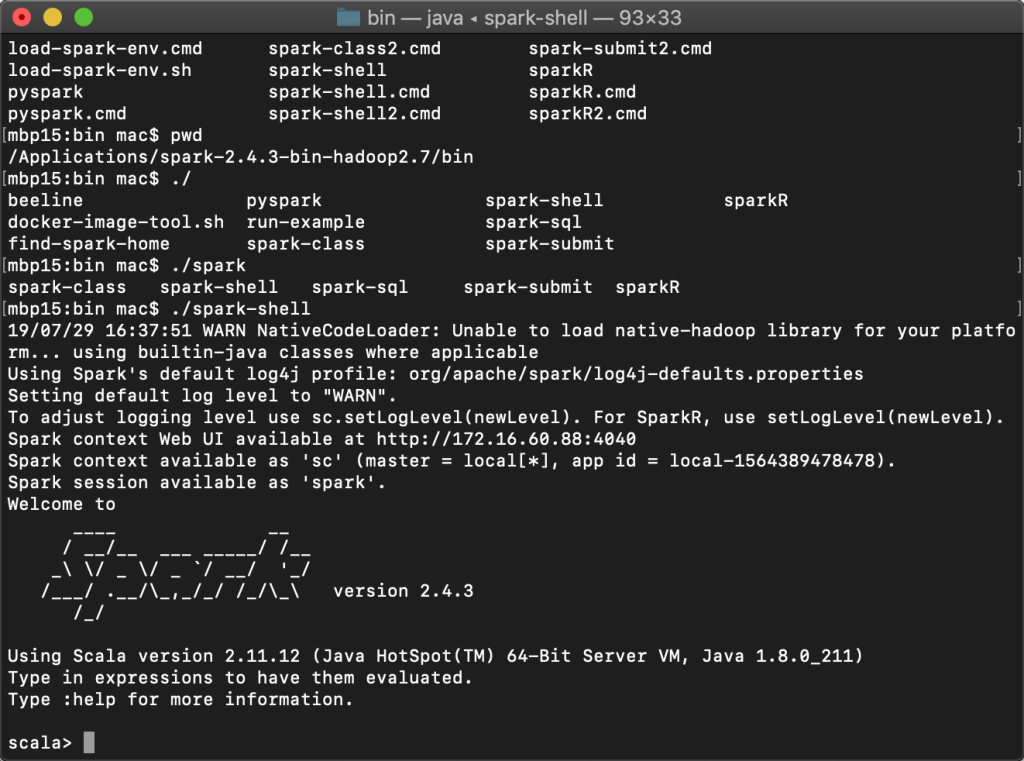

On the Spark downloads page, choose to download the zipped Spark package pre-built for Apache Hadoop 2.7+. The pre-built package is the simplest option.
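As a rough sketch of what that choice maps to, the pre-built archives follow a predictable file name. The helper below is hypothetical: it assumes the `spark-<version>-bin-hadoop2.7.tgz` naming and the Apache archive layout under `archive.apache.org/dist/spark/`, neither of which is stated in this article, and the version `2.1.1` is used only as an example.

```python
# Hypothetical helper: builds the download URL for a pre-built Spark package.
# Assumption: archives live under archive.apache.org/dist/spark/spark-<version>/.
ARCHIVE_ROOT = "https://archive.apache.org/dist/spark"


def prebuilt_package_url(version: str, hadoop: str = "2.7") -> str:
    """Return the assumed archive URL for a Spark build pre-built for Hadoop."""
    name = f"spark-{version}-bin-hadoop{hadoop}.tgz"
    return f"{ARCHIVE_ROOT}/spark-{version}/{name}"


if __name__ == "__main__":
    print(prebuilt_package_url("2.1.1"))
```

Check the URL against the downloads page before relying on it, since mirror layouts can change.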

A common error on Windows at this point is that `spark` is not recognized as an internal or external command.
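This kind of message means the shell could not find the command on its search path. A minimal sketch of a check follows, assuming the launcher is named `spark-shell` and that a `SPARK_HOME` variable may point at the unpacked Spark directory. Both names are assumptions, not taken from this article, and `find_spark_shell` is a hypothetical helper.

```python
import os
import shutil
from typing import Mapping, Optional


def find_spark_shell(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the path to spark-shell if it can be found, else None."""
    env = os.environ if env is None else env

    # First look in the directories listed in PATH.
    found = shutil.which("spark-shell", path=env.get("PATH", ""))
    if found:
        return found

    # Fall back to SPARK_HOME/bin (assumed layout of the unpacked package).
    home = env.get("SPARK_HOME")
    if home:
        return shutil.which("spark-shell", path=os.path.join(home, "bin"))
    return None


if __name__ == "__main__":
    location = find_spark_shell()
    print(location or "spark-shell not found; add Spark's bin directory to PATH")
```

If the function returns `None`, adding Spark's `bin` directory to `PATH` is the usual fix.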

#How to install spark from source code code#

#How to install spark from source code how to#

This Apache Spark tutorial is a step-by-step guide to the first step in learning Apache Spark. The release of Spark 2.0 included a number of significant improvements, including unifying DataFrame and Dataset and replacing SQLContext and HiveContext with the SparkSession entry point. As of this writing, Spark's latest release is 2.1.1. Apache Spark for Big Data Analytics and Machine Learning is available now (link below).

The first order of business is to download the latest source. Choose a package type, selecting Source Code from option 2, and either download directly or select a mirror. We'll use apt-get to install git's dependencies: `apt install build-essential dh-autoreconf libcurl4-gnutls-dev libexpat1-dev gettext libz-dev libssl-dev -y`.

After you meet the prerequisites, you can install Spark & Hive Tools for Visual Studio Code by following these steps: open Visual Studio Code, then from the menu bar navigate to View > Extensions.

Jupyter Notebook is a web-based interactive computational environment in which you can combine code execution, rich text, mathematics, plots and rich media to create a notebook. It supports over 40 programming languages, the most popular being Python, R, Julia and Scala. The actual Jupyter notebook is nothing more than a JSON document containing an ordered list of input/output cells. Jupyter notebooks can be converted to a number of open standard output formats, including HTML, presentation slides, LaTeX, PDF, reStructuredText, Markdown, and Python. Be sure to check out the Jupyter Notebook beginner guide to learn more, including how to install Jupyter Notebook. Additionally, check out some Jupyter Notebook tips, tricks and shortcuts.
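To make the "JSON document containing an ordered list of cells" point concrete, here is a minimal sketch that builds a tiny notebook as a Python dictionary, serializes it, and reads the cell order back. The field names follow the nbformat 4 layout as I understand it; the cell contents and the `cell_types` helper are illustrative assumptions.

```python
import json

# A minimal notebook in the nbformat 4 layout (illustrative content only).
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Demo"]},
        {
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": ["print(1 + 1)"],
        },
    ],
}


def cell_types(nb_json: str) -> list:
    """Return the cell types of a notebook, in document order."""
    return [cell["cell_type"] for cell in json.loads(nb_json)["cells"]]


# Serialize the way an .ipynb file would be stored, then read it back.
text = json.dumps(notebook, indent=1)
print(cell_types(text))
```

Writing `text` to a file with an `.ipynb` extension should give something Jupyter can open, although real notebooks carry more metadata than this.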
When installing a package from source code, you’ll need to manage the installation of the package dependencies. Spark provides an interface for programming entire clusters with implicit data parallelism and fault-tolerance.
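For Spark itself, the source tree ships a Maven wrapper script under `build/` that fetches the build requirements for you. The sketch below wraps the `./build/mvn -DskipTests clean package` command from Spark's build documentation. The function names and the `source_dir` argument are hypothetical, and the script assumes a Unix-like shell.

```python
import subprocess
from pathlib import Path
from typing import List


def maven_build_command(skip_tests: bool = True) -> List[str]:
    """Return the Maven wrapper command used to build Spark from source."""
    cmd = ["./build/mvn"]
    if skip_tests:
        # Skipping tests shortens the build considerably.
        cmd.append("-DskipTests")
    cmd += ["clean", "package"]
    return cmd


def build_spark(source_dir: str) -> None:
    """Run the build inside the unpacked source directory (assumed path)."""
    subprocess.run(maven_build_command(), cwd=Path(source_dir), check=True)


if __name__ == "__main__":
    print(" ".join(maven_build_command()))
```

Calling `build_spark` on the unpacked source directory starts a full build, which can take a long time on a first run.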

#How to install spark from source code update#

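    import io.delta.tables.*;
    import org.apache.spark.sql.Column;
    import org.apache.spark.sql.functions;
    import java.util.HashMap;

    DeltaTable deltaTable = DeltaTable.forPath("/tmp/delta-table");

    // Update every even value by adding 100 to it.
    // Assumption: the value column is named "id"; the original snippet is cut off here.
    HashMap<String, Column> set = new HashMap<>();
    set.put("id", functions.expr("id + 100"));
    deltaTable.update(functions.expr("id % 2 == 0"), set);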
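The effect of that update can be illustrated without Spark. The sketch below is plain Python, not the Delta Lake API. It only shows what "update every even value by adding 100 to it" does to a column of values, and the function name is hypothetical.

```python
def add_100_to_even(values):
    """Return a new list where every even value has 100 added to it."""
    return [v + 100 if v % 2 == 0 else v for v in values]


if __name__ == "__main__":
    # Values 0..4 stand in for the rows of a small table.
    print(add_100_to_even(range(5)))  # [100, 1, 102, 3, 104]
```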
